Disclaimer: The views and opinions expressed in this blog are entirely my own and do not necessarily reflect the views of my current or any previous employer. This blog may also contain links to other websites or resources. I am not responsible for the content on those external sites or any changes that may occur after the publication of my posts.

End Disclaimer

There’s a great recurring bit in Spider-Man: Into the Spider-Verse where each version of Spider-Man walks through how they became Spider-Man.

Basically, how we got here.

“Okay, let’s do this one more time”, but for AI.

“My name is Machine Learning, and for the last, say, 70-ish years, everyone’s just called me Artificial Intelligence, no matter what I do. I’m pretty sure you know the rest. I went to Dartmouth. I guessed a bunch of handwritten numbers, backpropagated my way across layers, recognized some more images, guessed some things, took some time off for a winter break, then took some time off again for another winter break. I got stronger, ate more, changed my training. Beat some Go players. In 2017 some friends at Google and one intern found a way to stop doing all that exhausting ‘recurrent’ stuff. Worked pretty well. I transformed myself. Off to the races now. You can’t get me to stop talking. So no matter how many iterations I find myself in (text, image, video, speech), I always find a way to come back. Because the only thing standing between us and technological stagnation is me. There’s only one Artificial Intelligence. And you’re looking at him.”

*wink*

Okay, I butchered this, but it was fun to think through.

The Path Towards General Artificial Intelligence, aka the Singularity, aka Skynet becomes sentient, aka “I, for one, welcome our robot overlords”, aka…

I’m not an AI doomer. Doomers hold that we are basically all, well, doomed by the coming technological advances. AI wakes up one day, decides humans are either unnecessary or don’t fit into its objective function, and goes FOOM.

I don’t think AI is coming for us to maximize paperclip output. I do think it is going to be (already is) a very powerful, very helpful tool.

I think we will have a soft take-off towards AGI (Artificial General Intelligence): iterative progression over time, even assuming that AIs will be able to recursively improve themselves and that those iterations might look like jumps in the short term.

AMI (Advanced Machine Intelligence), another euphemism for AGI, sort of sounds better to me and less menacing (mon ami!), so I’ll use that.

I think we are a good while away (a decade+) from AGI/AMI. Some reasons why I think that (I could also just be wrong):

We need new model architectures beyond generative ones. There are some interesting non-generative approaches that learn more abstract representations of inputs and environments, like Meta’s Joint Embedding Predictive Architecture (JEPA).
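Roughly, the difference is in *what* the model is asked to predict. A generative model reconstructs the raw target (pixels, tokens). A JEPA-style model encodes a context x and a target y separately and predicts the target’s *embedding* from the context’s embedding, so it can ignore details that are inherently unpredictable. Here is a schematic comparison, loosely following the I-JEPA setup. The symbols are my own shorthand, not Meta’s exact notation: z is whatever tells the predictor which target to predict (in I-JEPA, the position of the masked block), and the target encoder weights θ̄ are, in I-JEPA, an exponential moving average of θ rather than trained directly.

```latex
\begin{aligned}
\text{Generative:}\quad & \hat{y} = g_\phi(x), & \mathcal{L}_{\text{gen}} &= \lVert \hat{y} - y \rVert_2^2 \\
\text{JEPA-style:}\quad & s_x = f_\theta(x),\;\; s_y = f_{\bar{\theta}}(y),\;\; \hat{s}_y = g_\phi(s_x, z), & \mathcal{L}_{\text{JEPA}} &= \lVert \hat{s}_y - s_y \rVert_2^2
\end{aligned}
```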

Scaling up model size increases efficacy, but the returns from scaling alone may be diminishing.

To compensate, we find ways to deal with what is effectively the curse of dimensionality: cutting floating-point precision, broader quantization, LoRA (Low-Rank Adaptation), etc. Optimisation through shrinkage or increased “fuzziness”.
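To make the “shrinkage” tricks concrete, here is a toy, pure-Python sketch of two of them. Symmetric int8 quantization stores each weight in 1 byte instead of 4 (float32), at the cost of some rounding “fuzz”. LoRA freezes a big d×k weight matrix and trains two thin matrices, B (d×r) and A (r×k), instead. This is an illustration under simplifying assumptions: the function names and example numbers are mine, and real libraries quantize per-channel or per-block and handle outliers.

```python
# Toy illustration of two "shrinkage" tricks; function names are hypothetical.


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: map floats onto integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero input
    return [round(w / scale) for w in weights], scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats; the rounding error is the added 'fuzziness'."""
    return [qi * scale for qi in q]


def lora_trainable_params(d: int, k: int, r: int) -> tuple[int, int]:
    """Full fine-tuning updates a d x k matrix; LoRA trains B (d x r) and A (r x k) instead."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    w = [0.81, -0.33, 0.02, -1.27, 0.5]
    q, scale = quantize_int8(w)
    approx = dequantize_int8(q, scale)
    print(q, scale)
    # Worst-case reconstruction error is roughly scale / 2.
    print(max(abs(a - b) for a, b in zip(w, approx)))

    full, lora = lora_trainable_params(4096, 4096, r=8)
    print(full, lora, full // lora)  # 16777216 65536 256
```

For a single 4096×4096 layer, rank-8 LoRA trains 256 times fewer parameters than full fine-tuning. That gap is why it shows up so often when people adapt big models on modest hardware.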

One tell of a regime change towards AMI will be a marked change in physical chip design and/or chip fabrication.

AI is still “dumb” (Otakar ducks a tomato thrown by an AI researcher).

Example 1: how far away are we from the level of understanding required for an AI-infused robot to completely clean a bathroom? (Something that would be very useful.)

Example 2: Level 5 autonomous driving has been “right around the corner” for years (decades now?). To borrow an example from Yann LeCun, it takes roughly 20 hours of practice for a 15-, 16-, or 17-year-old human brain to learn how to drive a car (badly).

Without some jumps in the areas I’ve mentioned above, like architecture, optimisation, and chip design, Moravec’s paradox (after Hans Moravec) continues to loom large over AI and robotics: computers can do things humans find very difficult, but are terrible at many things humans do easily or pick up from a few examples (few-shot) or none at all (zero-shot).

Build vs. Buy AI at Your Company

Building:

What you need if you are at a company and want to build:

The 3 most important things when choosing to build AI at your company:

A leader and team who know how to “do AI”, i.e. take a boss telling you to “do AI” and translate that into making money. I know a guy.

A boss that will let you do it, tell other people “this is happening”, and give you some runway

(1-3 years if your data is good, 3-5 if not)

Some semblance (the more concrete the better) of a Data Strategy before an AI Strategy.

AI at your company is a non-starter before you’ve decided how to aggregate, clean, and standardize your data.

I’ve written about what it takes and what can go wrong here.

Good AI/ML teams, once installed, will buy component pieces rather than try to build them if:

It would take unreasonably long to build

They would be reinventing the wheel

They can’t build a product of that quality (e.g. big LLMs)

Buying:

What you need if you are at a company and want to buy:

Don’t do this if you can afford not to.

Why?

You get locked into an ecosystem.

Many very new companies effectively charge you to build their product on the fly.

Do they control your data? Even after you leave them?

Black box. Trust us! It’s all fine…

You still need someone to explain the output and understand the nomenclature:

Discriminative AI output example: is 95% accuracy or precision good, bad, terrible, or bullshit?

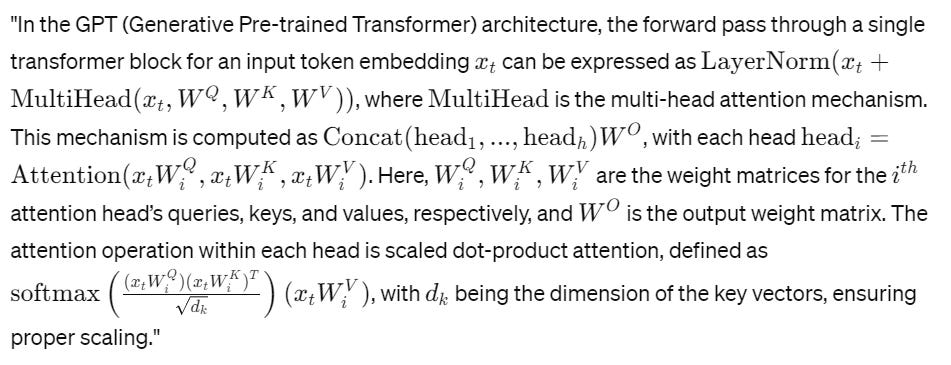
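Here is why “95% accuracy” on its own can be bullshit. Take a hypothetical data set of 1,000 transactions, 50 of them fraudulent. A model that never flags anything is 95% accurate and catches nothing. Precision (of the things flagged, how many were real) and recall (of the real things, how many were flagged) tell a very different story. The data and function names below are illustrative, not from any real system.

```python
# Hypothetical data: 1,000 transactions, 50 fraudulent (label 1), 950 legitimate (label 0).


def confusion_counts(y_true: list[int], y_pred: list[int]) -> tuple[int, int, int, int]:
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # fraud, flagged
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # legit, flagged anyway
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # fraud, missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # legit, left alone
    return tp, fp, fn, tn


def metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": (tp + tn) / len(y_true),
        # Undefined when nothing is flagged; reported as 0.0 here.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


if __name__ == "__main__":
    y_true = [1] * 50 + [0] * 950

    lazy = [0] * 1000  # never flags anything
    print(metrics(y_true, lazy))  # accuracy 0.95, precision 0.0, recall 0.0

    cautious = [1] + [0] * 999  # flags exactly one case, correctly
    print(metrics(y_true, cautious))  # accuracy 0.951, precision 1.0, recall 0.02
```

Both “models” post impressive-sounding numbers, and neither is useful. That is exactly the kind of thing the person explaining your vendor’s output needs to catch.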

Some generative AI guts:

(Robot Voice and moving arms up and down)

“Beep boop beep, look at me, I am the AI guy.”

(Ends robot voice, stops gesticulating with arms)

Do you have someone who knows what this means and how it contributes to the efficacy of your LLM, but, maybe more importantly, can explain these types of things intuitively without needing big words and funky symbols?

“Hey I thought this was math, where’s all the numbers?” (Otakar dodges another tomato).
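For a concrete taste of generative AI guts that such a person should be able to explain in plain words, here is a minimal pure-Python sketch of scaled dot-product attention, softmax(QKᵀ / √d_k)·V. This is the core operation of the 2017 transformer from those friends at Google. Intuitively, each query scores every key, the scores become weights that sum to 1, and the output is a weighted average of the values. The toy sizes, values, and function names are my own. Real models run this as matrix math on GPUs, across many heads and layers.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q: list[list[float]], K: list[list[float]], V: list[list[float]]) -> list[list[float]]:
    """Scaled dot-product attention, computed one query row at a time."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # How well this query matches each key, scaled by sqrt(d_k) so the softmax doesn't saturate.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


if __name__ == "__main__":
    Q = [[1.0, 0.0]]
    K = [[1.0, 0.0], [0.0, 1.0]]
    V = [[10.0, 0.0], [0.0, 10.0]]
    # Leans toward the first value, because the query matches the first key better.
    print(attention(Q, K, V))
```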

What LLMs can “Actually” do right now for companies

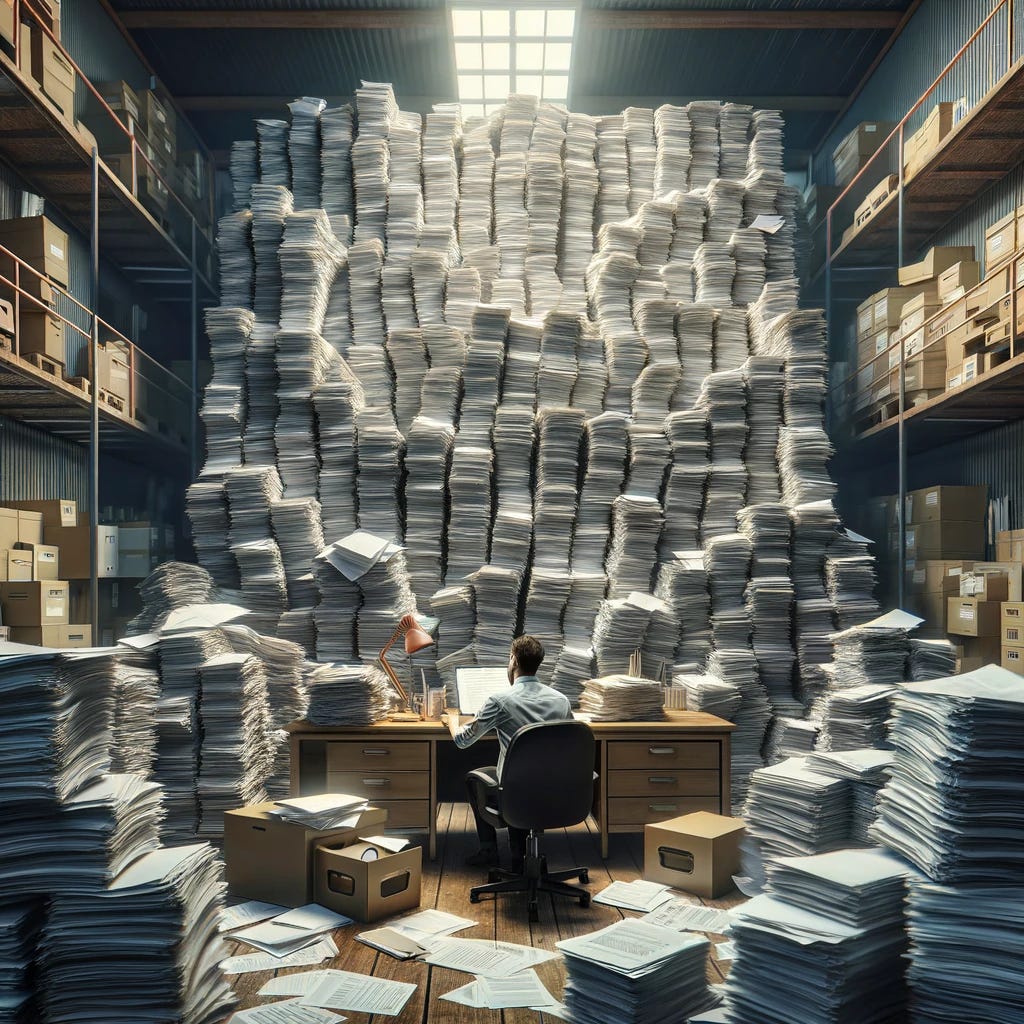

Extracting, summarizing, and querying documents are the name of the game right now for most in-house use cases.

Automate. Automate. Automate.

These are the areas where most companies can see an immediate, practical impact from using LLMs (large language models).

But wait! What about an LLM on your own data? RAG (Retrieval-Augmented Generation), fine-tuning, or building your own completely from scratch? Wow, that’s cool, more complicated, and not the first thing I would choose to do. Remember, this is for right now. These methods assume a level of data cleanliness, availability, and standardization that is likely not present at most companies across the board (come on, be honest).
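For the curious, the “retrieval” half of RAG boils down to finding the chunks of your own documents most similar to a question and pasting them into the prompt. Below is a hedged toy sketch. It uses bag-of-words cosine similarity as a stand-in for learned embeddings and a vector database, the function names and documents are hypothetical, and the actual LLM call is left out. Retrieval can only surface what is in your documents, and only surfaces it well if those documents are clean and consistent. That is the data-cleanliness point again.

```python
import math
import re
from collections import Counter

# Toy stand-in for embeddings + a vector DB; all names here are hypothetical.


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank documents by similarity to the query and keep the top k."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """'Augment' the prompt with retrieved text; sending it to an LLM is not shown."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\n\nContext:\n{joined}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Expense reports are due on the 5th of each month.",
        "The VPN requires two-factor authentication.",
        "Travel expenses over $500 need manager approval.",
    ]
    question = "When are expense reports due?"
    print(build_prompt(question, retrieve(question, docs, k=1)))
```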

So, back to automating, extracting, and standardizing. (You’ll be puzzling over the RAG vs. fine-tune conundrum in no time! You could also just wait a bit.)

The stack I’m talking about is an API for one of the current level 4 “big one” LLMs (GPT, Gemini, Claude, etc.), accessed through a cloud service provider like Azure, GCP, or AWS.

If you are looking to implement LLMs at your company, the privacy of your data is paramount, and hosting through one of these three gigantic tech companies should come with an implicit or explicit privacy assurance.

No third-party vendor currently on my radar replaces, or even offers, the facility and dexterity of building things by writing your own code on top of an LLM API. (Let me know if you’ve found one.)

Anybody selling you soup-to-nuts systems that offer rebreather-like capability (i.e. nothing wasted, super efficient, so important it “breathes for you”) in a closed loop is, at the moment, let’s call it, greatly exaggerating the capabilities of what they are selling.

Empirically, here’s what I’ve found so far:

Extract, summarize, query, and compare pretty much anything from PDFs, Excel files, email bodies, and Word docs. There are still some wrinkles and edge cases for each of these document types, but they are getting smoothed out and worked around.

These capabilities encompass a ton of use cases, predominantly automation, aggregation, and triage of incoming data.

There’s also going to be an extra trader alpha bonus coming out of this: new, interesting, previously uncollected data sets.

That thing someone sent you that you wished you could pull out and save for later to analyse? The days of someone copy-pasting an Excel bitmap into Word so that it’s conveniently inaccessible are over.
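Here is roughly what “writing additional code on top of an LLM API” looks like for extraction. `call_llm` is a hypothetical placeholder for whichever provider SDK you use through Azure, GCP, or AWS. I’m deliberately not showing a specific vendor’s client, and the field names are made up. What matters is the wrapper: a fixed prompt, JSON parsing, and validation, with anything malformed routed to a human instead of being silently trusted.

```python
import json
from typing import Callable

# Hypothetical schema: the fields we want out of every incoming document.
REQUIRED_FIELDS = {"sender", "date", "amount", "currency"}


def build_extraction_prompt(document_text: str) -> str:
    fields = ", ".join(sorted(REQUIRED_FIELDS))
    return (
        f"Extract the following fields from the document as a JSON object: {fields}. "
        "Use null for anything missing. Return only JSON.\n\n"
        f"Document:\n{document_text}"
    )


def extract_fields(document_text: str, call_llm: Callable[[str], str]) -> dict:
    """Ask the model for JSON, then validate it before anything downstream trusts it.

    `call_llm` is a hypothetical stand-in: any function that takes a prompt and returns text.
    """
    raw = call_llm(build_extraction_prompt(document_text))
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return {"status": "needs_review", "reason": "model did not return valid JSON", "raw": raw}
    if not isinstance(data, dict):
        return {"status": "needs_review", "reason": "expected a JSON object", "raw": raw}
    missing = REQUIRED_FIELDS - data.keys()
    if missing:
        return {"status": "needs_review", "reason": f"missing fields: {sorted(missing)}", "raw": raw}
    return {"status": "ok", "fields": data}


if __name__ == "__main__":
    # Fake model for demonstration; swap in your provider's real client call.
    def fake_llm(prompt: str) -> str:
        return '{"sender": "ACME Corp", "date": "2024-03-01", "amount": 1250.0, "currency": "USD"}'

    print(extract_fields("Invoice from ACME Corp, 1 March 2024, total USD 1,250.00", fake_llm))
```

The same pattern covers summarizing, comparing, and triaging incoming documents. Only the prompt and the validation rules change.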

What about AI Agents?

The idea behind AI agents is that you have a bunch of semiautonomous or fully autonomous AIs/programs that are able to build out complex projects for you from start to finish.
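Under the hood, many of today’s agent setups boil down to a loop: ask an LLM what to do next, run the tool it picks, append the result to the prompt, and repeat until it says it’s done. Below is a hedged, minimal sketch of that loop. `call_llm`, the tool names, and the JSON reply format are all hypothetical, and real systems add planning, memory, error handling, and guardrails. It also makes the point that a lot of “agents” are really stacks of LLM calls.

```python
import json
from typing import Callable

# Hypothetical tools the agent is allowed to call.
TOOLS: dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
    "upper": lambda text: text.upper(),
}


def run_agent(task: str, call_llm: Callable[[str], str], max_steps: int = 5) -> str:
    """Minimal agent loop: ask the model for the next action, run it, feed back the result.

    `call_llm` is a hypothetical stand-in for a real model call that returns JSON text.
    """
    history = [f"Task: {task}"]
    for _ in range(max_steps):
        prompt = "\n".join(history) + (
            '\nReply with JSON: {"tool": name, "input": text} or {"final": answer}. '
            f"Tools: {sorted(TOOLS)}"
        )
        action = json.loads(call_llm(prompt))
        if "final" in action:
            return action["final"]
        tool = TOOLS.get(action.get("tool"))
        observation = tool(action.get("input", "")) if tool else f"unknown tool {action.get('tool')!r}"
        history.append(f"Action: {json.dumps(action)}\nObservation: {observation}")
    return "stopped: step limit reached"


if __name__ == "__main__":
    # Scripted stand-in for a model: call one tool, then answer using the observation.
    def scripted_llm(prompt: str) -> str:
        if "Observation:" not in prompt:
            return json.dumps({"tool": "word_count", "input": "stacks of LLMs in disguise"})
        count = prompt.rsplit("Observation: ", 1)[1].split("\n")[0]
        return json.dumps({"final": f"The phrase has {count} words."})

    print(run_agent("Count the words in 'stacks of LLMs in disguise'", scripted_llm))
```

The `max_steps` cap matters. Without it, a confused model can loop forever and bill you for every call.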

One distinguishing element of generative AIs (when compared to discriminative AIs) is that they are already able to make things: “Write me a paper on ”, “Construct a poem”, “Give me ten new song titles for the band ‘A Cadre of Brilliant Assholes’”.

Many current purported full-agent systems are stacks of LLMs in disguise. That’s fine-ish for now, as long as you don’t run out and buy any for your company. This is a transitory phase that will blow over into the real thing.

And the real thing may be coming sooner rather than later.

Cognition AI came out of “stealth mode” (the worst startup term for “still figuring out what we are going to build”) to launch Devin, the “first AI software engineer,” by their account capable of building complete apps end to end, autonomously fine-tuning AI models, and doing jobs listed on Upwork.

It’s not that Devin will be the right agent system, or that it even works, but that it heralds what is coming down the pike.

AI Agents are a next step in chatbots, moving closer to having an AI assistant or AI staff working for you round the clock.

GPT-5, I’m looking at you. This summer…?

I’ll keep updating these as things change. This was fun (at least for me).

Let’s do this again sometime.

Anything else I’m forgetting right now?

Ah, yes.

That’s right.

Thanks for reminding me.

“Okay, let’s do this one more time”.

On to the next one.

Don’t slow down.
