“We’ll call it AI to Sell it, Machine Learning to Build it”

An AI Public Service Announcement

Start Disclaimer: The views and opinions expressed in this blog are entirely my own and do not necessarily reflect the views of my current or any previous employer. The information shared is for informational and discussion purposes only. Any reliance on the information provided in this blog is at your own risk. I do not make any representations or warranties regarding the accuracy, completeness, or suitability of the information.

This blog may also contain links to other websites or resources. I am not responsible for the content on those external sites or any changes that may occur after the publication of my posts.

End Disclaimer

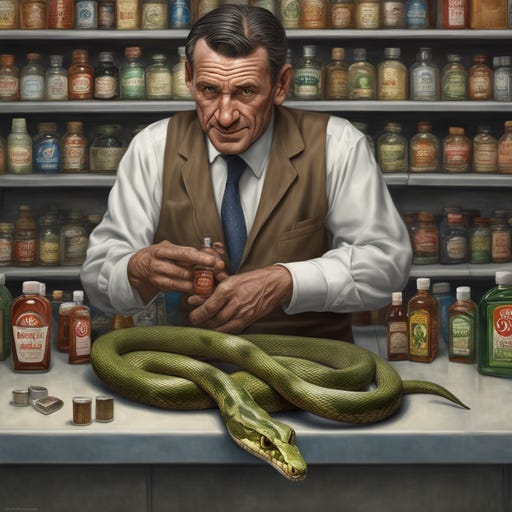

“Step right up and take a peek, I have a Grade-A, bon-a-fide AI product right here. Good for what ails you! Powered by AI as they like to say! Harness the power of computers with Artificial Intelligence! What's that you say? How’s it work? Well, why, this is AI for ‘x’ right here. Guaranteed to solve your problems, grow back your follicles, even fix that bout of gout in your big toe! It is the future after all. The Singularity and such, believe they call that A-G-I.”

“Wait, what are you doing? No, no, no, don’t peek behind the curtain there, nothing to see, wait, stop, no!”

So GenAI is here. Ask anything, learn anything, produce any image, any video. Remember how big it was when AlphaGo beat the world in 2016? I do. It was awesome. Well, beating Go, as impressive as that is, is a constrained (albeit very difficult) machine intelligence application (Demis, don’t unfriend me!). A year later we got the Transformer paper. In 2023, it took ChatGPT two months to acquire 100 million users. This is only the beginning. As philosopher David Friedman says, “Today’s AI is the worst you will ever use.” GenAI is already pretty amazing, and OpenAI has been casually dropping big improvements every couple of weeks. So that’s a lot of real coolness mixed with a lot of hype, and people will take advantage of that hype.

In the run-up to Uber’s IPO in 2019, venture capital funds were flooded with pitches from startups offering “Uber for X”. Uber for parking spaces. Uber for home cleaning. Some succeeded. More failed. There are a lot of “AI for X” sales pitches coming. Some will be real. More won’t be, especially given the visibility and zeitgeist around GPT. The paint isn’t even dry yet on the “crypto-expert-turned-AI-expert” quick change emanating from crypto’s most recent FTX-induced winter.

I’ve had salespeople pitch me their AI expertise only to find the algorithms in their pitchbooks mislabeled. I’ve been told that a product was “driven by AI” only to find out it was driven by “if-then” statements. I’ve been told that documents and disparate knowledge could be stripped and stitched back together again using the finest in NLP technology, only to find out that the tech that stitched it together again was human hands and keystrokes, in between cigarette breaks, at an office building in Southeast Asia.

So, who cares? I get it. Everybody’s got to make a living. Why does this bother me so much? Because it’s not telling the truth and it’s taking advantage of people. It’s using big AI words to hide the truth and claim expertise. Richard Feynman (always need a Feynman anecdote) talks about knowing that “names don’t constitute knowledge”. Purporting to know the name of the thing is not knowing the thing. Selling the name of the thing is not always selling the thing.

So be cautious when a salesperson comes knocking on your door with an “AI solution” that fixes everything. Anyone I’ve ever worked with who actually builds things calls it machine learning anyway.

Here are some questions to help establish vendor credibility:

Ask them what broad swath of machine learning is involved in the product, e.g. supervised, unsupervised, a certain type of neural net architecture, etc.

Ask them the names of the algorithms behind the AI: XGBoost, BERT, whatever.

What does the model predict? What is the actual prediction output: is it 1/0, yes/no, a number?

Ask them what objective function (also called loss or cost function) the model is optimizing. What is the error the model is trying to minimize?

What metrics do they use to measure model efficacy? This is closely related to the objective function question. How do they know how well the model is able to generalize to unseen data?

Ask them how often they change up their model: the cadence and the reasons why.

How much are humans involved in this “AI solution”? Many automation tasks like NER and document OCR that get sold as AI fall somewhere on the continuum between machine learning and Mechanical Turk. What’s the split?

Bonus and especially relevant question: are they selling “GPT as a service” (GaaS, trademark pending, thank you, I’ll see myself out)? This means that their user-facing GUI is a thin wrapper around the GPT API, and the service might not add any benefit (only added costs).
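If a few of those questions still feel abstract, here is a minimal sketch of what good answers look like in practice. It assumes a toy binary classifier (plain logistic regression trained by gradient descent) on made-up synthetic data; it is not any vendor's product, and every name in it (`make_toy_data`, `predict_proba`, `log_loss`, `train`, `accuracy`) is hypothetical and chosen for illustration. It shows a prediction output (a probability you can turn into 1/0), an objective function (log loss, the error being minimized), and a metric measured on held-out data the model never saw.

```python
import math
import random


def make_toy_data(n, seed=0):
    """Synthetic, made-up data: the label is 1 when x1 + x2 > 1, else 0."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x1, x2 = rng.random(), rng.random()
        rows.append(((x1, x2), 1 if x1 + x2 > 1 else 0))
    return rows


def predict_proba(weights, bias, features):
    """Prediction output: a probability between 0 and 1."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(weights, bias, rows):
    """Objective function: mean log loss, the error training tries to minimize."""
    eps = 1e-12  # keeps log() away from zero
    total = 0.0
    for features, label in rows:
        p = min(max(predict_proba(weights, bias, features), eps), 1 - eps)
        total -= label * math.log(p) + (1 - label) * math.log(1 - p)
    return total / len(rows)


def train(rows, epochs=1000, lr=1.0):
    """Full-batch gradient descent on the log loss."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for features, label in rows:
            err = predict_proba(weights, bias, features) - label
            for i, x in enumerate(features):
                grad_w[i] += err * x
            grad_b += err
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(rows)
    return weights, bias


def accuracy(weights, bias, rows):
    """Efficacy metric: share of rows where the 1/0 call matches the label."""
    hits = sum((predict_proba(weights, bias, f) >= 0.5) == bool(y) for f, y in rows)
    return hits / len(rows)


data = make_toy_data(400)
train_rows, test_rows = data[:300], data[300:]  # hold out rows the model never sees

weights, bias = train(train_rows)

print("train log loss:", round(log_loss(weights, bias, train_rows), 3))
print("test log loss: ", round(log_loss(weights, bias, test_rows), 3))
print("test accuracy: ", round(accuracy(weights, bias, test_rows), 3))
```

A vendor with a real model should be able to point at their own equivalents of these pieces: what comes out of the model, what error training minimizes, and what number they report on data held back from training.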

To be forewarned is to be forearmed.

Machine learning is magic. Ask anyone who does it. It’s alchemy. There are going to be some great AI products coming out, but also some stinkers, and some AI wolves in sheep’s clothing.

Ask questions. Don’t be left obfuscated by nomenclature. Wait a second… Phew! Those are some big words there in that last sentence! I’m exhausted from sounding so smart. See what I mean? Don’t be bullied or confused by technical-sounding terms in AI. Every big AI word can be decomposed into an intuitive explanation. Don’t get duped. Keep going. You can do it. Don’t slow down.

https://en.wikipedia.org/wiki/AI_effect

So you are misusing the term yourself: any kind of software is AI, or even any kind of machine. (That's why the term AI is best avoided altogether, except maybe in psychology; think "Turing test".)

Artificial neural networks implemented in software/hardware have been around since 1958, though they didn't have much success, except maybe in research and video games (already in the 1970s-1980s). Most game AI today is *still* "if-then" statements, as are countless AI expert systems.

Machine learning is at least as old, and does not necessarily involve neural networks. Its successes are also older than AlphaGo: https://arstechnica.com/gaming/2011/01/skynet-meets-the-swarm-how-the-berkeley-overmind-won-the-2010-starcraft-ai-competition/ (And this is a *late* example.)

(Of course using humans "inside" your machine and claiming it to be "AI" is on a whole other level of "fraud".)