Disclaimer: The views and opinions expressed in this blog are entirely my own and do not necessarily reflect the views of my current or any previous employer. This blog may also contain links to other websites or resources. I am not responsible for the content on those external sites or any changes that may occur after the publication of my posts.

End Disclaimer

“Fear is the mind killer” -Frank Herbert

“Contentment is the career killer” -Otakar

“I will either find a way or make one.” -Hannibal

News

AIML

Generative AI Is Challenging a 234-Year-Old Law

Google Mired in Controversy Over AI Chatbot Push

Microsoft Introduces 1-Bit LLM

Tyler Perry Halts $800M Studio Expansion, Citing Concerns Over OpenAI’s Sora

Predictive Human Preference: From Model Ranking to Model Routing

Why Google is Pausing Gemini's Ability to Generate Images

Deepfakes Will Break Democracy

How Selective Forgetting Can Help AI Learn Better

Fine-tuning a large language model on Kaggle Notebooks

Humanoid robots draw millions from Bezos, OpenAI and more

A.I. Frenzy Complicates Efforts to Keep Power-Hungry Data Sites Green

Painting

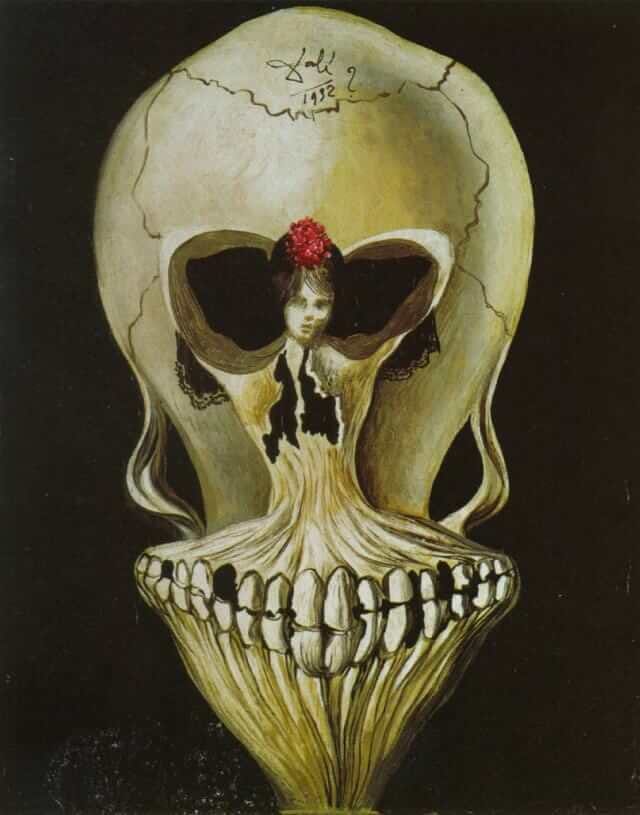

Ballerina in a Death's Head, (Ballerine en tête de mort), 1939 by Salvador Dali, from a private Swiss collection

Crypto

US judge halts government effort to monitor crypto mining energy use

Cyber

'Everybody Is Just Scrambling': Nationwide Cyber Attack Delays Bay Area Pharmacy Orders

Markets

Charlie Munger – The Architect of Berkshire Hathaway

‘The Architect of Berkshire Hathaway:’ Warren Buffett Honors Charlie Munger

Apple to Wind Down Electric Car Effort After Decadelong Odyssey

30 Chefs Open Up About Tipping, Gen Z Cooks and You the Customer

Nvidia Hardware Is Eating the World

Are We in a Productivity Boom? For Clues, Look to 1994.

What a Major Solar Storm Could Do to Our Planet

Once the darling of the EV world, the electric truck-maker Rivian is reeling

Median Down Payment for a House by US State

Misc

“Dune” and the Delicate Art of Making Fictional Languages

A tech billionaire is quietly buying up land in Hawaii. No one knows why

The 100 Most Valuable Sports Teams in the World (2023)

Peter Thiel’s $100,000 Offer to Skip College Is More Popular Than Ever

Inside Nirvana’s last ever show

$1 Billion Donation Will Provide Free Tuition at a Bronx Medical School

The longest-living people in the world all abide by the ‘Power 9’ rule

Paper

The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits

Reducing to 1 bit from 1.58 bits in the context of Large Language Models (LLMs), specifically BitNet b1.58, involves the following:

Quantization to Ternary Values: Parameters in the LLM are quantized to three possible values (-1, 0, 1), instead of the full precision (e.g., FP16 or BF16) used in traditional LLMs.

Efficiency Gains: This reduction significantly improves cost-effectiveness in terms of latency, memory usage, throughput, and energy consumption while maintaining comparable model performance.

New Scaling Law: The 1.58-bit approach defines a new scaling law for training LLMs that are both high-performance and cost-effective.

Hardware Optimization: Encourages the development of specific hardware optimized for 1-bit LLMs, enhancing computation and energy efficiency.

Improved Modeling Capability: The inclusion of 0 allows for explicit support for feature filtering, which can significantly improve performance.

Comparable Performance: Experiments show that BitNet b1.58 can match the perplexity and end-task performance of full precision models starting from a certain model size, utilizing the same configuration.

Do Large Language Models Latently Perform Multi-Hop Reasoning?

The document explores Large Language Models' (LLMs) latent multi-hop reasoning capabilities through complex prompts, focusing on the ability to recall and utilize interconnected pieces of information. Key findings include:

Evidence of first-hop reasoning is substantial, indicating LLMs can recall a key entity based on the prompt.

Second-hop reasoning, where LLMs use the recalled entity to complete a prompt, shows moderate evidence.

The study introduces the TWOHOPFACT dataset and novel metrics (internal entity recall and consistency scores) to assess LLMs' reasoning abilities.

Findings suggest a scaling effect where larger models improve first-hop reasoning but not necessarily second-hop reasoning.

The study reveals that the utilization of latent multi-hop reasoning pathways is highly contextual and varies across different types of prompts.