Disclaimer: The views and opinions expressed in this blog are entirely my own and do not necessarily reflect the views of my current or any previous employer. This blog may also contain links to other websites or resources. I am not responsible for the content on those external sites or any changes that may occur after the publication of my posts.

End Disclaimer

“Never interrupt your enemy when he is making a mistake” -Napoleon Bonaparte

News

AIML

AI is Coming for Wall Street Jobs

New bill would force AI companies to reveal use of copyrighted art

TSMC boss says one-trillion transistor GPU is possible by early 2030s

Evidence that LLMs are reaching a point of diminishing returns — and what that might mean

Anyone got a contact at OpenAI. They have a spider problem.

Chronon, Airbnb’s ML Feature Platform, Is Now Open Source

How I Built an AI-Powered, Self-Running Propaganda Machine for $105

How Tech Giants Cut Corners to Harvest Data for A.I.

Painting

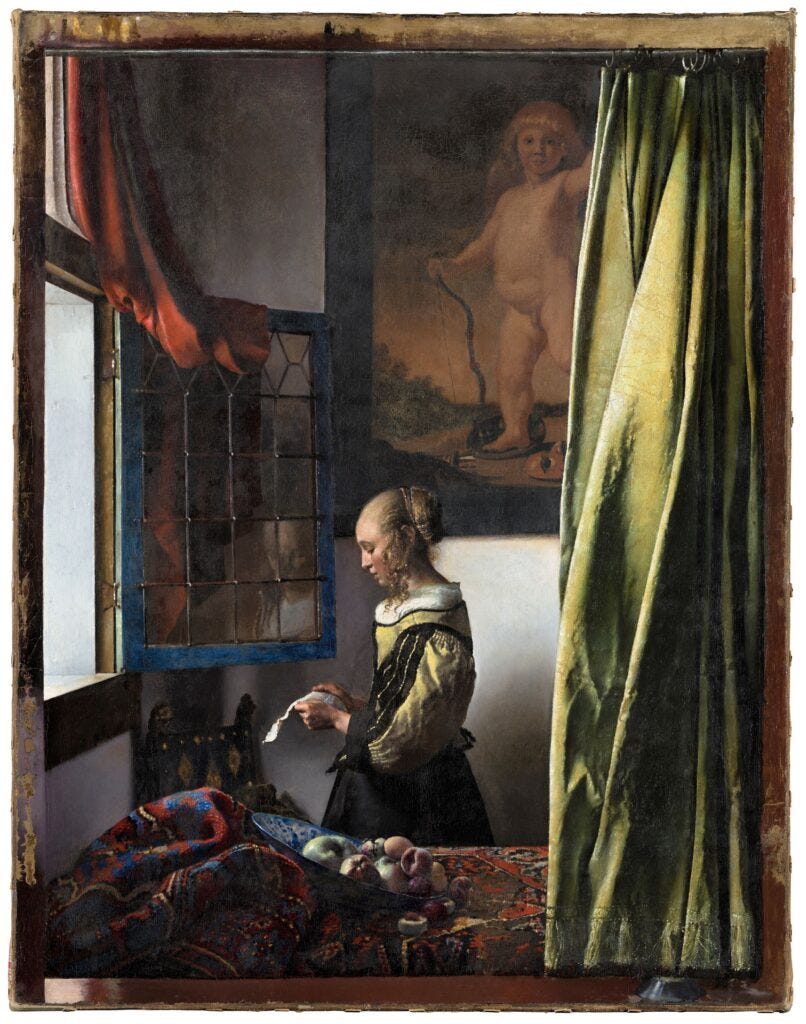

Before Restoration:

Girl Reading a Letter 1657-59, Johannes Vermeer (1632–1675), Oil on Canvas, 83 cm × 64.5 cm (33 in × 25.4 in)

After Restoration:

A Hidden Image Revealed Behind Vermeer's Girl Reading a Letter at an Open Window

The Mysterious Cupid and Johannes Vermeer’s Paintings

Cyber

Why CISA is Warning CISOs About a Breach at Sisense

Markets

Billionaire Ken Griffin hasn't written to Citadel investors in years. Read what he has to say now.

When Will Elon Musk’s Driverless Car Claims Have Credibility?

15 Stocks That Have Destroyed the Most Wealth Over the Past Decade

What Makes Housing So Expensive?

The U.S. Urgently Needs a Bigger Grid. Here’s a Fast Solution.

Misc

A Secret Code May Have Been Hiding in Classical Music for 200 Years

Two Bay Area railway workers charged for building secret apartments inside train stations

Pacific castaways’ ‘HELP’ sign sparks US rescue mission

How a Reality TV Show Turned the U.F.C. From Pariah to Juggernaut

'The greatest card ever assembled': Inside the making of UFC 300

Paper

RHO-1: Not All Tokens Are What You Need

Selective Training: The paper introduces a novel approach to training language models called Selective Language Modeling (SLM), in which the training loss is computed only on tokens deemed useful, rather than on every token in the training data.

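Token Evaluation: Each token is scored against a reference model. The score is the excess loss, meaning the training model's loss on that token minus the reference model's loss, and only tokens with high excess loss are used for training, which effectively filters out unhelpful or noisy data.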

Efficiency and Effectiveness: Results show that this method not only reaches baseline accuracy with far fewer training tokens (up to 10x faster) but also improves model performance significantly on tasks such as mathematical problem solving.

Practical Implications: The approach suggests a potential shift in how training data is handled, focusing on quality and relevance rather than quantity, which could lead to more efficient use of computational resources and better-performing models.
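To make the token evaluation step concrete, here is a minimal sketch in plain Python. It is not the paper's code: the function names, the `keep_ratio` parameter, and the toy loss values are all assumptions for illustration. It assumes you already have per-token losses from the model being trained and from a reference model, keeps the tokens with the highest excess loss, and averages the training loss over only those tokens.

```python
def select_tokens(train_losses, ref_losses, keep_ratio):
    """Return a 0/1 mask keeping the top `keep_ratio` share of tokens by excess loss.

    Excess loss = training-model loss minus reference-model loss, per token.
    `keep_ratio` is a hypothetical knob for this sketch.
    """
    excess = [t - r for t, r in zip(train_losses, ref_losses)]
    k = max(1, int(len(excess) * keep_ratio))
    # Indices of the k tokens with the largest excess loss.
    top = sorted(range(len(excess)), key=lambda i: excess[i], reverse=True)[:k]
    mask = [0] * len(excess)
    for i in top:
        mask[i] = 1
    return mask


def selective_loss(train_losses, mask):
    """Average the training loss over the selected tokens only."""
    kept = [loss for loss, m in zip(train_losses, mask) if m]
    return sum(kept) / len(kept)


# Toy per-token losses (made up for illustration).
train_losses = [2.0, 0.5, 3.0, 1.0]
ref_losses = [0.5, 0.4, 2.9, 0.2]

mask = select_tokens(train_losses, ref_losses, keep_ratio=0.5)
loss = selective_loss(train_losses, mask)
print(mask, loss)
```

Note that the third token has the highest raw loss (3.0) but is not selected, because the reference model finds it almost as hard. That is the intuition behind scoring by excess loss rather than raw loss: tokens that stay hard even for the reference model are more likely to be noise than something worth learning.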