Erroneous Experts, AI Primordial Ooze, and Willy Wonka

12 Observations from my working on Artificial Intelligence projects in 2024

Disclaimer: The views and opinions expressed in this blog are entirely my own and do not necessarily reflect the views of my current or any previous employer. This blog may also contain links to other websites or resources. I am not responsible for the content on those external sites or any changes that may occur after the publication of my posts.

End Disclaimer

12 observations from working with AI in 2024.

Big picture-ish, mostly non-technical, all with a delicious hard candy-coated shell.

Using LLMs still seems like magic to me

I have a pretty good understanding of what goes on behind the scenes with LLMs, in terms of how the sausage gets made before the output comes out the other side.

I still can’t believe how cool LLMs are or what they are capable of doing.

Once words are tokenized (chunked), the model works with multiple numerical representations (IDs, vectors, probabilities) before eventually converting back to text at the very end.

All the user sees is text.
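To make that text-to-numbers-to-text round trip a little more concrete, here is a toy sketch in Python. Everything in it is an assumption for illustration: the six-word vocabulary, the random "embedding" table, and the stand-in scoring function are all made up, and a real LLM uses a learned tokenizer and a large neural network instead.

```python
import math
import random

# Toy vocabulary: hypothetical, far smaller than any real tokenizer's.
VOCAB = ["the", "cat", "sat", "on", "mat", "<unk>"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}

def tokenize(text):
    """Split on whitespace and map each chunk to an integer ID."""
    unk = TOKEN_TO_ID["<unk>"]
    return [TOKEN_TO_ID.get(word, unk) for word in text.lower().split()]

def embed(token_ids, dim=4, seed=0):
    """Look up one vector per ID. Random here; learned in a real model."""
    rng = random.Random(seed)
    table = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in VOCAB]
    return [table[i] for i in token_ids]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_probs(vectors, dim=4, seed=1):
    """Stand-in for the model: score every vocab entry against the last vector."""
    rng = random.Random(seed)
    out_weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in VOCAB]
    last = vectors[-1]
    scores = [sum(w * x for w, x in zip(row, last)) for row in out_weights]
    return softmax(scores)

def decode(token_ids):
    """Convert IDs back into text, the only part the user sees."""
    return " ".join(VOCAB[i] for i in token_ids)

ids = tokenize("The cat sat")          # text -> IDs
vectors = embed(ids)                   # IDs -> vectors
probs = next_token_probs(vectors)      # vectors -> probabilities
best_id = max(range(len(VOCAB)), key=lambda i: probs[i])
print(ids)
print(decode(ids + [best_id]))         # back to text
```

The "model" here is random numbers, so the predicted word is meaningless. The point is only the shape of the pipeline: text in, numbers in the middle, text out.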

I use Anthropic’s Claude every single day as a tutor and assistant to explain things to me intuitively.

Never gets tired, always on call, instantaneous responses, doesn’t give snarky answers like Stack Overflow does.

I can ask it about any subject I can think of and go down the rabbit holes as deep as I can manage.

This is available and accessible to almost anyone with a phone or a computer and internet.

That’s pretty magical.

Despite the magic show, LLMs are naive

LLMs can return answers that give the illusion that they are thinking and creating output from a world view, but LLMs have no conception of the whole.

They’re programmatically predicting the statistically most likely next token (roughly, the next word or piece of a word).

The understanding is statistical, rather than semantic.

Pattern recognition, not comprehension.

Still a long way to go.

Still amazing, still in the early innings.

Ground truth “answer sheets” are an imperative component of LLM projects

LLMs are stochastic, and even more stochastic if you are turning up the temperature (still a badly named inference time/sampling hyperparameter).
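For anyone who hasn't poked at it, temperature is just a number that divides the model's raw scores before they are turned into probabilities. A minimal sketch, assuming three made-up candidate tokens with made-up scores (the function name is mine, not any particular library's):

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores to probabilities, dividing by temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.1]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Low temperature piles nearly all the probability onto the top candidate, so sampling is close to deterministic. High temperature flattens the distribution, so less likely tokens get picked more often.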

For use cases like data extraction, summarization, and comparison, we prioritize a combination of End-to-End Accuracy (the overall correctness of the extracted data) and Information Extraction F1 Score (a balance of precision and recall). There isn't a single universal metric; it often depends on the specific extraction task and requirements.
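Here is one generic way those two metrics could be computed for a field-extraction task. This is a textbook-style sketch, not the scoring used on any project of mine: the field names and values are invented, and treating "end-to-end accuracy" as the share of documents extracted exactly right is my assumption for the example.

```python
def extraction_scores(predicted, truth):
    """Score one document's extracted fields against its ground truth answer sheet."""
    pred_items = set(predicted.items())
    true_items = set(truth.items())
    correct = len(pred_items & true_items)  # field/value pairs that match exactly
    precision = correct / len(pred_items) if pred_items else 0.0
    recall = correct / len(true_items) if true_items else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1,
            "exact_match": pred_items == true_items}

def end_to_end_accuracy(pairs):
    """Fraction of (predicted, truth) documents that were extracted exactly right."""
    if not pairs:
        return 0.0
    hits = sum(1 for predicted, truth in pairs if predicted == truth)
    return hits / len(pairs)

# Hypothetical answer sheet and model output for a single invoice.
truth = {"invoice_id": "A-100", "total": "42.00", "currency": "USD"}
predicted = {"invoice_id": "A-100", "total": "24.00",
             "currency": "USD", "vendor": "Acme"}

scores = extraction_scores(predicted, truth)
print(scores)
print(end_to_end_accuracy([(predicted, truth), (truth, truth)]))
```

In this made-up invoice the model got two of its four extracted fields right (precision 0.5) and found two of the three true fields (recall about 0.67). None of this works without the answer sheet to compare against.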

Just as with discriminative supervised machine learning AI, the model needs to be trained (in this case post-trained or calibrated) to a set of ground truth labels.

This takes time and is tedious, but it’s been absolutely critical in every LLM project I’ve worked on this year.

How do you build those and what’s the most effective way? Sorry - secret sauce cooked only for my current employer.

No one cares what you built with Gen AI

If you are building products for others to use…

No one cares what you built before with cool discriminative AI, and no one cares now what you built with Gen AI and LLMs.

All the same rules apply.

Build the thing.

Sell the thing.

Two distinct phases with distinct considerations for each.

In some respects the building of the thing is more straightforward than the selling of the thing.

The average user at most companies does not need to appreciate all the architecture abstracted away behind the scenes.

What they do tend to appreciate are the end products that remove friction and the mundane, rote, parts of their jobs that they hate.

Agentic AI / AI Agents is the new “nobody knows what it means” AI term

“Agentic AI is the future”.

What is agentic AI? I’m still not really sure.

I’ve been pitched agentic AI that was if-then statements (always!), agentic AI that was LLMs with embedded functions to do things like write code, and agentic AI as Matryoshka dolls, with some level of LLMs orchestrating the agency and functionality.

I see agentic AI as the nested Matryoshka LLMs, which I believe can provide real utility and are the focus, directionally, for the near future.

Right now I have yet to see agentic systems that are, as Ilya Sutskever has said, “agents in any real sense”. What we have now are “light” or “thin agents”.

Same as it ever was: like “AI”, “Big Data”, and “the cloud” before it, people will use the term “agentic AI” for whatever suits their purposes.

The “AI Experts” are still really far apart in what they think is going to happen next

The names you’ve heard in the press and online are still miles apart on topics such as scaling laws, the future of inference scaling and more generally the timing, likelihood, or definition of AGI.

Opinions come from your biases, the knowledge you have on the subject, guesses, and in this case probably have to do with what shop you are working at and a bit of talking up your own book.

Lots of “Experts” (big ‘E’) will get the future wrong.

I don’t know what’s going to happen next, and the majority of “AI Experts” don’t know either.

There’s plenty to do right now.

If the technology stopped advancing right now, there would still be projects to do for years

LLM growth is slowing down! We are reaching scaling limits! Oh nooooo!

If technology were to freeze or if there were suddenly, oh I don’t know, a moratorium, there would be at least 3-5 years’ worth of projects to tackle given the current state of LLMs and generative AI.

Generative AI can be transformative and a huge bubble

Generative AI has plenty of applications right now that can help companies transform the way they work and increase their efficiency.

Companies are spending tons on capex chasing AI which leads to expectations to deliver on that expenditure. Many companies won’t be able to deliver on these expectations.

These two things can both be happening at the same time; they are not mutually exclusive.

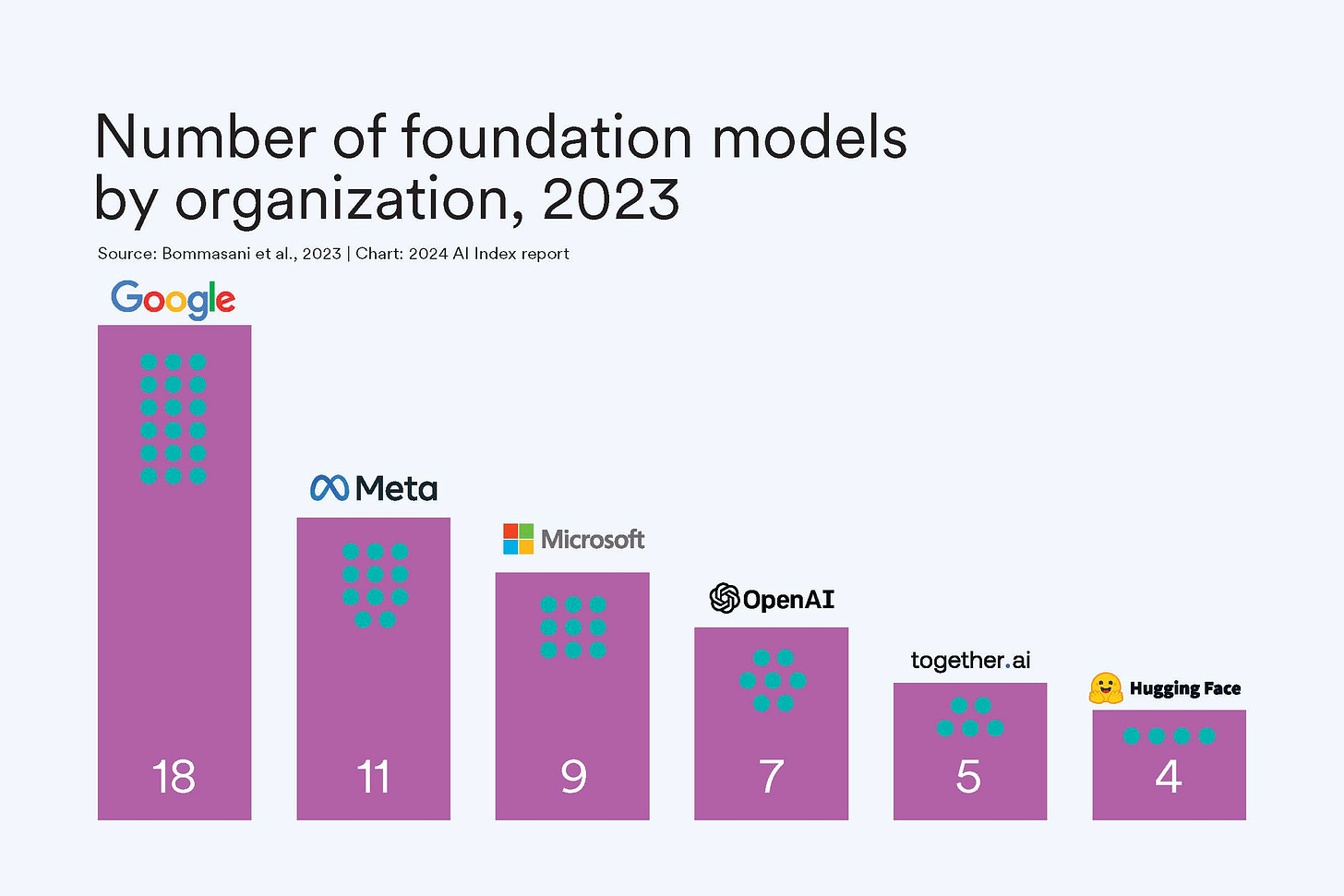

LLMs have already become commodities

There are 5-6 or so companies building multiple iterations of foundational large language models. They all sort of train them the same way. At this point it’s a bit hard to tell them apart, aside from some wrinkles.

Fear of Missing Out is unhelpful when building a strong structural AI

I’ve heard multiple anecdotes this year of people saying “I need an AI something to present to my C-Suite.”

Give it to me now.

So places spend money to buy or build someAIthing without a clearly defined ROI or a team that knows how to use it.

And most places don’t have the clean data in place to begin to use AI in the first place.

Pick a silo with enough data -> clean the data -> apply use case -> apply algorithms

Temper the FOMO.

It’s not helpful for the long run or for occupational longevity.

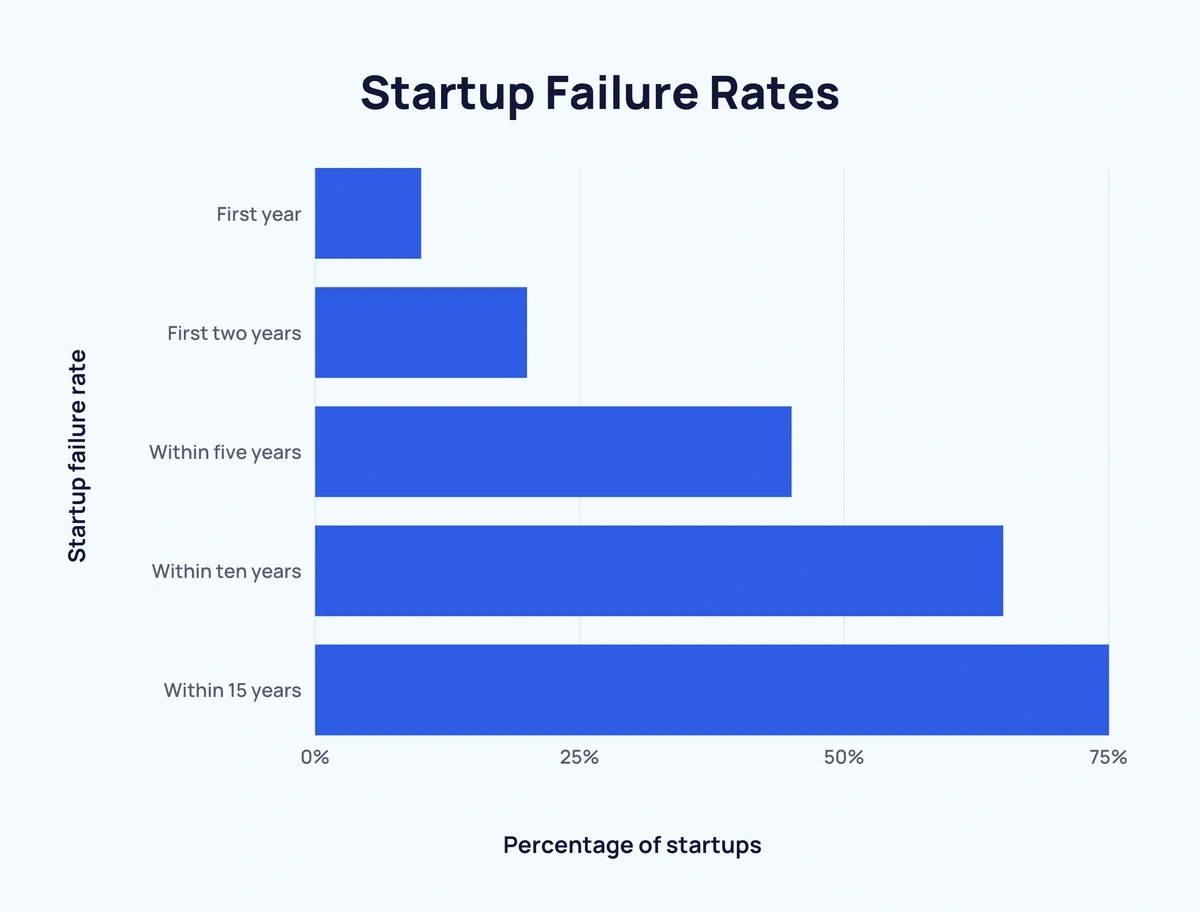

Every internal AI team at a company is a tech startup

Buy vs. build, internal team vs. outsourced team, CAIO or not, winging it or having at least someone on staff to decipher the output: all these decision points make success for AI at your company increasingly path dependent.

Many places will try.

Many places will fail.

It’s not easy.

Strive for concrete, small wins.

Take some snow, make a snowball, and get it rolling down a steep snow-covered hill.

As always, but especially in burgeoning tech times, there are a massive number of fakers

There have always been those people in AI, but they’ve come out of the woodwork since shortly after GPT burst into the zeitgeist.

Still, I’m a bit surprised that I’ve heard self-professed “AI experts” (small ‘e’ this time) speak at conferences mixing up nomenclature on broad topics.

But maybe I’m going to the wrong conferences…

Remain vigilant.

Sequoia Cap predicted that 2024 would be a year of “AI primordial soup”. I think that’s an apt description: waiting to see which things would learn to crawl, pull themselves out of the muck, and stagger to stand on firm legs.

Now let’s see where our newly formed, proto-life form AI creatures take us next.

There’s so much to do.

Don’t slow down.